Why you should use a service mesh with microservices

Still on the fence about service mesh? See how it supports a microservices architecture and application deployments, along with the other benefits it brings to an organization.

Microservices and distributed applications depend on fast, reliable network communication and complex orchestration to deliver high performance and scale quickly. At that level of intricacy, management has to be automated. Controls such as load balancing, routing rules, service discovery and authentication become too complex for IT administrators to define manually.

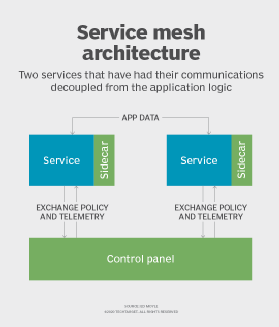

A service mesh provides platform-level automation for communication between the services of a containerized application. Service mesh tools ease operational management and handle east-west traffic, such as remote procedure calls that originate within the data center and travel between services.

In contrast, an API gateway handles north-south traffic: external requests that enter the data center to reach an endpoint or service. Because it gives organizations fine-grained control over how new releases receive traffic, a service mesh lets them run microservices at scale while maintaining high availability, resiliency and secure communications.

Let's explore the benefits and advantages of a service mesh approach, examine use cases and consider possible limitations.

Key service mesh components and history

Organizations that deploy large-scale applications composed of microservices use a service mesh to route requests and scale services quickly. Dynamic service discovery makes that scaling possible. It requires both a container orchestration system, such as Kubernetes, and API-driven network proxies to route traffic.
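To make dynamic service discovery concrete, here is a minimal sketch of an in-memory registry, assuming a simple model in which instances register, renew their entry with heartbeats and drop out of lookups once a heartbeat goes stale. The class names, the time-to-live value and the addresses are hypothetical. In practice, an orchestrator such as Kubernetes maintains this state, and the mesh's proxies consume it through APIs.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    """One running instance of a service (hypothetical model)."""
    address: str
    last_heartbeat: float = field(default_factory=time.monotonic)


class ServiceRegistry:
    """Hypothetical in-memory registry: instances register, renew with
    heartbeats, and drop out of lookups once their heartbeat is stale."""

    def __init__(self, ttl_seconds: float = 10.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._services: dict[str, dict[str, Endpoint]] = {}

    def register(self, service: str, address: str, now: float | None = None) -> None:
        """Add or refresh an instance; `now` is injectable for testing."""
        now = time.monotonic() if now is None else now
        self._services.setdefault(service, {})[address] = Endpoint(address, now)

    # A heartbeat simply refreshes the registration timestamp.
    heartbeat = register

    def deregister(self, service: str, address: str) -> None:
        """Remove an instance, e.g. during a graceful shutdown."""
        self._services.get(service, {}).pop(address, None)

    def lookup(self, service: str, now: float | None = None) -> list[str]:
        """Return addresses whose last heartbeat is within the TTL."""
        now = time.monotonic() if now is None else now
        instances = self._services.get(service, {}).values()
        return sorted(ep.address for ep in instances
                      if now - ep.last_heartbeat <= self.ttl_seconds)


registry = ServiceRegistry(ttl_seconds=10)
registry.register("payments", "10.0.0.5:8080", now=0.0)
registry.register("payments", "10.0.0.6:8080", now=0.0)
registry.heartbeat("payments", "10.0.0.5:8080", now=8.0)
print(registry.lookup("payments", now=15.0))  # ['10.0.0.5:8080']
```

The injectable `now` parameter keeps the example deterministic. The instance that stopped sending heartbeats disappears from lookups without any manual change, which is the property that lets a mesh follow services as they scale up and down.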

Sidecar proxies, which run alongside each service instance, make up the service mesh data plane and carry the exchanges between microservices. Because the data plane touches every packet and request in the system, it is where observability, health checks, routing and load balancing take place.
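The following sketch shows the kind of per-request decision a sidecar makes in the data plane: round-robin load balancing that skips upstream endpoints that failed a health check. It is a simplified model with hypothetical names and addresses. Real proxies such as Envoy offer several load-balancing algorithms and run health checks on their own schedule.

```python
from __future__ import annotations

import itertools


class SidecarBalancer:
    """Hypothetical sketch of a sidecar's load-balancing step: round-robin
    over upstream endpoints, skipping any that failed a health check."""

    def __init__(self, endpoints: list[str]) -> None:
        if not endpoints:
            raise ValueError("at least one upstream endpoint is required")
        self.endpoints = list(endpoints)
        self.healthy = set(self.endpoints)
        self._cycle = itertools.cycle(self.endpoints)

    def record_health_check(self, endpoint: str, passed: bool) -> None:
        """Update health state from an (externally run) active health check."""
        if endpoint not in self.endpoints:
            raise KeyError(endpoint)
        if passed:
            self.healthy.add(endpoint)
        else:
            self.healthy.discard(endpoint)

    def pick(self) -> str:
        """Return the next healthy endpoint in round-robin order."""
        if not self.healthy:
            raise RuntimeError("no healthy upstream endpoints")
        # One full pass over the cycle is enough to find a healthy endpoint.
        for _ in range(len(self.endpoints)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("unreachable: healthy set is a subset of endpoints")


balancer = SidecarBalancer(["10.0.0.5:8080", "10.0.0.6:8080", "10.0.0.7:8080"])
balancer.record_health_check("10.0.0.6:8080", passed=False)
print([balancer.pick() for _ in range(4)])
# ['10.0.0.5:8080', '10.0.0.7:8080', '10.0.0.5:8080', '10.0.0.7:8080']
```

Because this logic lives in the proxy rather than in each service, every service gets the same balancing and health-check behavior without any change to its own code.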

Management processes in the control plane coordinate proxy behavior through APIs. These routing capabilities are critical to distributed application performance and reliable data transfer. Human operators use the control plane's APIs to manage traffic control, network resiliency, security and authentication.
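The split between control plane and data plane can be modeled in a few lines. In this sketch, an operator sets routing weights once through a control-plane call, and the control plane validates the change and pushes it to every connected proxy, including proxies that connect later. All class and method names are hypothetical. Real control planes such as Istio's distribute configuration to Envoy over dedicated streaming APIs rather than through direct method calls.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RouteConfig:
    """Hypothetical routing rule: percentage of traffic per service version."""
    service: str
    weights: dict[str, int]
    version: int


class Proxy:
    """Stand-in for a data-plane sidecar that accepts pushed configuration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.routes: dict[str, RouteConfig] = {}

    def apply(self, config: RouteConfig) -> None:
        self.routes[config.service] = config


class ControlPlane:
    """Stores desired routing state and pushes it to every connected proxy."""

    def __init__(self) -> None:
        self._proxies: list[Proxy] = []
        self._routes: dict[str, RouteConfig] = {}

    def connect(self, proxy: Proxy) -> None:
        # A newly connected proxy receives the full current configuration.
        self._proxies.append(proxy)
        for config in self._routes.values():
            proxy.apply(config)

    def set_route(self, service: str, weights: dict[str, int]) -> RouteConfig:
        # Operator-facing API call: validation happens centrally, once.
        if sum(weights.values()) != 100 or any(w < 0 for w in weights.values()):
            raise ValueError("weights must be non-negative and sum to 100")
        previous = self._routes.get(service)
        next_version = previous.version + 1 if previous else 1
        config = RouteConfig(service, dict(weights), next_version)
        self._routes[service] = config
        for proxy in self._proxies:
            proxy.apply(config)
        return config


control_plane = ControlPlane()
orders_sidecar = Proxy("orders-sidecar")
control_plane.connect(orders_sidecar)
control_plane.set_route("reviews", {"v1": 90, "v2": 10})
print(orders_sidecar.routes["reviews"])
```

The design point is that the operator never reconfigures proxies one by one. A single API call updates the desired state, and the control plane propagates it.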

The ride-hailing company Lyft illustrates the potential of a service mesh approach. While moving its monolithic application to a service-oriented architecture, Lyft built the Envoy proxy and open-sourced it in 2016. In 2017, Lyft, IBM and Google launched Istio, which uses Envoy in its data plane. Designed primarily for Kubernetes, Istio manages service traffic flow, enforces access policies and aggregates telemetry data in the form of metrics, logs and traces.

Key elements of the Istio ecosystem include Envoy, a high-performance proxy for inbound and outbound traffic, and integrations such as the Jaeger UI for visualizing traces and debugging. The ideas behind Istio and service mesh technology date back to infrastructure Google built in the early 2000s, including Stubby (the internal remote procedure call framework that preceded gRPC), Google Front End and Global Software Load Balancer, as well as service communication libraries from Netflix and Twitter.

Service mesh benefits

An organization with an established CI/CD pipeline and DevOps processes can use a service mesh to deploy applications and infrastructure programmatically, keep networking logic out of application code and, by extension, update network and security policies through the same automated pipeline.

Organizations with large applications composed of many microservices can benefit from a service mesh approach. For example, businesses in healthcare and financial services can deploy a service mesh to enforce security and compliance policies consistently. Beyond encrypting traffic, enforcing zero-trust networking and authenticating service identities, organizations can use a service mesh to respond quickly to customer demands and competitive threats.
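Zero-trust enforcement in a mesh usually comes down to a default-deny check: a call proceeds only if an explicit rule permits that caller identity to invoke that operation on that service. The sketch below assumes identity strings modeled on the SPIFFE IDs that Istio derives from service accounts. In a real mesh the proxy takes the caller's identity from its mTLS certificate, whereas here it is a plain string argument. The class, method names and example identity are hypothetical.

```python
from __future__ import annotations


class AuthorizationPolicy:
    """Hypothetical default-deny policy: a call is allowed only when an
    explicit rule matches its (source identity, destination, method)."""

    def __init__(self) -> None:
        self._rules: set[tuple[str, str, str]] = set()

    def allow(self, source: str, destination: str, method: str) -> None:
        self._rules.add((source, destination, method.upper()))

    def is_allowed(self, source: str, destination: str, method: str) -> bool:
        # No matching rule means deny: nothing is trusted by default.
        return (source, destination, method.upper()) in self._rules


FRONTEND = "spiffe://cluster.local/ns/shop/sa/frontend"  # illustrative identity
policy = AuthorizationPolicy()
policy.allow(FRONTEND, "payments", "POST")
print(policy.is_allowed(FRONTEND, "payments", "POST"))    # True
print(policy.is_allowed(FRONTEND, "payments", "DELETE"))  # False
```

Because the mesh applies the same check in every sidecar, compliance teams can audit one policy rather than each service's own access-control code.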

Consistent application performance is critical for every organization. A well-configured service mesh improves observability through traces and metrics that IT teams can use to identify the root causes of failures and ensure application resiliency. In addition to managing the communications layer, an effective service mesh supports advanced security features.
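Distributed tracing works because each hop reuses the incoming trace ID and records its own span with a link to the caller's span. The sketch below models that propagation with hypothetical header names (`x-trace-id`, `x-span-id`). Real meshes typically follow standards such as W3C Trace Context or B3 headers, and the application must still forward the trace headers it receives on its outbound calls.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass

# Hypothetical header names; real meshes use standards such as W3C Trace Context or B3.
TRACE_HEADER = "x-trace-id"
SPAN_HEADER = "x-span-id"


@dataclass(frozen=True)
class Span:
    trace_id: str
    span_id: str
    parent_id: str | None
    service: str


def record_hop(service: str, incoming: dict[str, str], spans: list[Span]) -> dict[str, str]:
    """Record a span for `service` and return headers to forward downstream.

    Reuses the caller's trace ID when present, otherwise starts a new trace,
    and links this span to the caller's span as its parent."""
    trace_id = incoming.get(TRACE_HEADER) or secrets.token_hex(16)
    span = Span(trace_id, secrets.token_hex(8), incoming.get(SPAN_HEADER), service)
    spans.append(span)
    return {TRACE_HEADER: trace_id, SPAN_HEADER: span.span_id}


collected: list[Span] = []
headers = record_hop("gateway", {}, collected)
headers = record_hop("orders", headers, collected)
record_hop("payments", headers, collected)
for s in collected:
    print(s.service, s.trace_id[:8], s.parent_id)
```

All three spans share one trace ID, and the parent links let a tool such as a trace viewer reassemble the request path and show which hop contributed the most latency.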

Service mesh deployments enable companies to do the following:

- Increase release flexibility. Organizations can exercise greater control over testing and deployments, for example by routing a small percentage of traffic to a new version before a full rollout.

- Ensure high availability and fault tolerance. Businesses can deploy a service mesh to set up retries and failover and to test failure-handling code paths through fault injection.

- Maintain secure communications. Chief information security officers and IT teams can authenticate, authorize and encrypt service-to-service communications. For example, a service mesh can manage encryption between services with mutual Transport Layer Security (mTLS), which authenticates both ends of each connection.

- Gain greater visibility. Service mesh deployments provide observability and monitoring through latency metrics, distributed tracing support and real-time service-to-service monitoring.
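The release-flexibility and fault-tolerance benefits above can be sketched together: a weighted split that sends a small share of traffic to a canary version, a fault injector that makes a fraction of calls fail on purpose, and a retry policy that absorbs those failures. This is an illustrative model with hypothetical function names. In a real mesh, all three are declared as configuration and carried out by the proxies rather than by application code.

```python
from __future__ import annotations

import random
from collections import Counter
from typing import Callable


def pick_version(weights: dict[str, int], rng: random.Random) -> str:
    """Weighted traffic split, e.g. {"v1": 90, "v2": 10} for a canary release."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


class InjectedFault(Exception):
    """Simulated upstream failure raised by the fault injector."""


def with_fault_injection(handler: Callable[[str], str], failure_rate: float,
                         rng: random.Random) -> Callable[[str], str]:
    """Wrap a handler so a fraction of calls fail, to exercise error paths."""
    def wrapped(request: str) -> str:
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return handler(request)
    return wrapped


def call_with_retries(call: Callable[[str], str], request: str,
                      max_attempts: int = 3) -> str:
    """Retry failed calls up to max_attempts, as a sidecar retry policy would."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call(request)
        except InjectedFault:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last failure
    raise AssertionError("unreachable")


rng = random.Random(7)
print(Counter(pick_version({"v1": 90, "v2": 10}, rng) for _ in range(1000)))

flaky = with_fault_injection(lambda req: f"handled {req}", failure_rate=0.3, rng=rng)
successes = 0
for i in range(1000):
    try:
        call_with_retries(flaky, f"req-{i}")
        successes += 1
    except InjectedFault:
        pass
print(f"{successes} of 1000 requests succeeded with retries")
```

With a 30% injected failure rate and three attempts, a request fails only if all three attempts fail (0.3³ ≈ 2.7%). Fault injection lets teams confirm that this kind of resilience behaves as expected before a real outage tests it.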

Service mesh limitations

As microservices become more widespread, dynamic service discovery helps route around communication failures and enforce network policies. However, the added operational complexity can create new performance and observability challenges. Latency is one example: every request passes through extra proxy hops when services communicate, and although the delay each hop adds is often minuscule, it is not zero.
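A back-of-the-envelope estimate shows how small per-hop costs compound along a synchronous call chain. The sketch assumes that every service-to-service call passes through two sidecars (the caller's outbound proxy and the callee's inbound proxy), so a chain of N services makes N - 1 calls and adds 2(N - 1) proxy hops. The per-hop cost is a placeholder you would measure in your own environment, not a benchmark figure, and the function name is hypothetical.

```python
def added_mesh_latency_ms(services_in_chain: int, per_proxy_hop_ms: float) -> float:
    """Estimate extra latency from sidecar hops along a synchronous call chain.

    Assumes two proxy hops per service-to-service call (caller's outbound
    sidecar plus callee's inbound sidecar) and ignores ingress traffic."""
    if services_in_chain < 1:
        raise ValueError("a call chain needs at least one service")
    proxy_hops = 2 * (services_in_chain - 1)
    return proxy_hops * per_proxy_hop_ms


for n in (2, 5, 10):
    print(n, added_mesh_latency_ms(n, per_proxy_hop_ms=0.5))
# 2 1.0
# 5 4.0
# 10 9.0
```

With a hypothetical 0.5 ms per proxy hop, a two-service call adds about 1 ms, but a 10-service chain adds about 9 ms, which is why latency-sensitive applications with deep call graphs should measure this overhead.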

Organizations can spend considerable time and effort isolating performance problems and making manual code changes to the services they interact with. End-to-end visibility also suffers when a company does not own the code for a particular service and lacks key performance information and indicators.

The rise in microservices adoption and cloud-native applications means service mesh technology is rapidly becoming a foundational element. And while operations teams are responsible for service mesh deployments, they must work closely with development teams to configure service mesh properties. A possible next stage is service meshes becoming a built-in feature of modern PaaS offerings.