Chaos engineering

What is chaos engineering?

Chaos engineering is the practice of testing a distributed computing system to ensure that it can withstand unexpected disruptions. It relies on concepts underlying chaos theory, which focuses on random and unpredictable behavior. The goal of chaos engineering is to identify weaknesses in a system through controlled experiments that introduce random and unpredictable behavior.

A main benefit of chaos engineering is that organizations can use it to identify vulnerabilities before an attacker exploits them or a system failure exposes them. Changes made as a result of chaos engineering testing increase confidence in an organization's systems.

Some IT groups hold chaos engineering game days where teams try to break or breach systems. They use failure mode and effects analysis or other tactics to get insight into potential points of failure in their organization's systems.

The concepts behind chaos engineering

The main concept behind chaos engineering is to break a system on purpose to collect information that will help improve the system's resiliency. Chaos engineering is an approach to software testing and quality assurance. It is well suited to modern distributed systems and processes.

A distributed computing system is a group of computers linked over a network and sharing resources. These systems can break when unexpected situations occur. In large distributed systems, the components often have complex and unpredictable dependencies, which makes it difficult to troubleshoot errors or predict when an error will occur.

Distributed systems can fail in many ways. Their size and complexity can cause seemingly random events to occur. The bigger and more complex the system, the more unpredictable and chaotic its behavior appears.

Chaos engineering experiments intentionally generate turbulent conditions in a distributed system to test the system and find weaknesses. Problems a chaos experiment might uncover include:

- Blind spots. Places where monitoring software cannot gather adequate data.

- Hidden bugs. Glitches or other issues that can cause software to malfunction.

- Performance bottlenecks. Situations where efficiency and performance could be improved.

As more companies move to the cloud or the enterprise edge, their systems are becoming more distributed and complex. The same can be said about software development methodologies where continuous delivery is emphasized. Those development processes are getting increasingly complex as well. As an organization's infrastructure and processes for working within that infrastructure become more complex, the need to adapt to chaos grows.

How chaos engineering works

Chaos engineering is similar to stress testing in that it aims to identify and correct system or network issues. Unlike stress testing, chaos engineering doesn't test and correct one component at a time.

Chaos engineering examines problems that have a seemingly infinite number of possible causes. It looks beyond the obvious issues and tests distributed systems against problems or sets of problems that are less likely to happen. The goal is to gain new knowledge about the system.

The process is typically divided into several steps:

- Set the baseline. Identify how the system should operate under optimal conditions and specify what constitutes a normal working state.

- Create a hypothesis. Consider one or more potential weaknesses and formulate a hypothesis about the effects of those weaknesses. For example, software testers might want to know what will happen if a large traffic spike occurs.

- Test. Conduct experiments to gauge the consequences of the hypothesized condition, such as a large traffic spike. The experiments might reveal an error in a critical process or an unexpected cause-and-effect relationship. For example, a traffic spike simulation might reveal a storage performance issue.

- Evaluate. Measure and evaluate how the hypothesis holds up and determine which problems to fix.
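The four steps above can be sketched as a small experiment loop. This is a minimal illustration, not a real test harness: the latency model, the function names and the `max_slowdown` threshold are all assumptions made up for the example.

```python
def simulated_latency_ms(requests_per_second, capacity_rps=1000.0, base_ms=20.0):
    """Toy latency model (hypothetical): flat up to capacity, then grows quadratically."""
    if requests_per_second <= capacity_rps:
        return base_ms
    overload = requests_per_second / capacity_rps
    return base_ms * overload ** 2


def run_experiment(normal_rps, spike_rps, max_slowdown=2.0):
    """Run one traffic-spike experiment against the toy model."""
    # 1. Set the baseline: latency under normal traffic is the steady state.
    baseline = simulated_latency_ms(normal_rps)
    # 2. Create a hypothesis: during the spike, latency stays within
    #    max_slowdown times the baseline.
    limit = baseline * max_slowdown
    # 3. Test: apply the spike and observe the system.
    observed = simulated_latency_ms(spike_rps)
    # 4. Evaluate: compare the observation with the hypothesis.
    return {
        "baseline_ms": baseline,
        "observed_ms": observed,
        "hypothesis_holds": observed <= limit,
    }
```

In a real experiment, the baseline and the observation would come from monitoring data rather than a formula, but the structure of the comparison is the same.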

Chaos engineering teams take an ordered approach in their experiments, testing the following:

- The things they are aware of and understand.

- The things they are aware of but don't fully understand.

- The things they understand but are not aware of.

- The things they are not fully aware of and do not fully understand.

They use "what if" scenarios that can trigger faults and failures to evaluate the performance and integrity of the system.

Advanced principles of chaos engineering

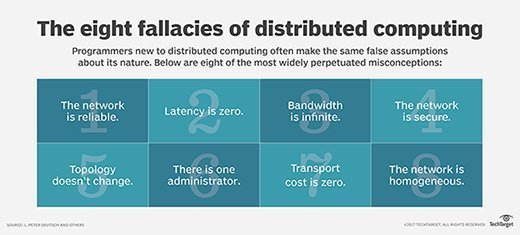

Computer scientist L. Peter Deutsch and his colleagues at Sun Microsystems developed a list of eight fallacies of distributed computing. These are false assumptions that programmers and engineers often make about distributed systems. They are a good starting point when applying chaos engineering to a problem. The eight fallacies are:

- The network is reliable.

- There is zero latency.

- Bandwidth is infinite.

- The network is secure.

- Topology never changes.

- There is one admin.

- Transport cost is zero.

- The network is homogeneous.

There is debate as to whether these fallacies are still fallacies, but chaos engineers continue to use them as core principles in understanding system and network problems. The theme underlying them is that systems and networks are never perfect or 100% reliable. This is why highly available systems are described in terms of "five nines": instead of striving for 100% availability, the closest engineers can get to perfection is 99.999%.
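An availability target translates directly into a downtime budget. The arithmetic can be sketched as follows, assuming a 365-day year; the function name is illustrative only.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a non-leap year


def allowed_downtime_seconds(availability_percent, period_seconds=SECONDS_PER_YEAR):
    """Return the downtime budget implied by an availability target."""
    return period_seconds * (1 - availability_percent / 100)


five_nines = allowed_downtime_seconds(99.999)   # about 315 seconds per year
three_nines = allowed_downtime_seconds(99.9)    # about 31,536 seconds per year
```

At 99.999% availability, the budget is a little over five minutes of downtime per year, which is why even short unplanned outages matter for such systems.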

These false assumptions are easy to make in distributed computing environments, and they are the basis of the seemingly random problems that arise out of complex distributed systems.

Chaos engineering best practices

Chaos engineering is complicated. Following these best practices can help avoid problems that stem from the fallacies listed above:

- Understand the usual behavior of the system. Having a solid understanding of the system when it is healthy will help in diagnosing problems.

- Simulate realistic scenarios. Focus on injecting likely failures and bugs. For example, if latency has been a problem in the past, inject faults that induce latency.

- Test using real-world conditions. This yields the most accurate results. Chaos engineering is often performed in production environments, especially when it is too cumbersome or expensive to duplicate a large, distributed system for testing purposes.

- Minimize the blast radius. Chaos engineering can be highly disruptive. Success demands coordination among IT staff, developers and business units. Experiments in production environments are rarely run at peak times, and ideally, nobody using the system will be able to tell that chaos experiments are taking place. Redundancy should be in place to ensure that services remain available if experiments do cause issues.
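One way to picture a limited blast radius is a fault injector that touches only a small fraction of requests and stops once a cap is reached. The sketch below is a hypothetical helper, not part of any real chaos tool; `LatencyInjector` and its parameters are invented for illustration, and it only reports the delay to add rather than actually sleeping.

```python
import random


class LatencyInjector:
    """Adds simulated latency to a small fraction of requests (hypothetical helper)."""

    def __init__(self, fraction, delay_ms, max_affected, seed=None):
        if not 0.0 <= fraction <= 1.0:
            raise ValueError("fraction must be between 0 and 1")
        self.fraction = fraction          # share of requests eligible for the fault
        self.delay_ms = delay_ms          # latency added to an affected request
        self.max_affected = max_affected  # hard cap on the blast radius
        self.affected = 0
        self._rng = random.Random(seed)

    def extra_latency_ms(self):
        """Return the latency to add to one request, honoring the cap."""
        if self.affected >= self.max_affected:
            return 0.0  # cap reached: stop injecting faults
        if self._rng.random() < self.fraction:
            self.affected += 1
            return self.delay_ms
        return 0.0
```

A caller would ask the injector for the extra latency on each request. Once `max_affected` requests have been delayed, the experiment effectively ends on its own, regardless of how much traffic follows.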

Examples of chaos engineering

Imagine a distributed system that can handle a certain number of transactions per second. Chaos engineering testing can be used to find out how the software responds when that transaction limit is reached. Does performance degrade, or does the system crash?

Chaos engineering can also be used to test how the distributed system behaves when it experiences a shortage of resources or when a single point of failure goes down. If the system fails, developers can implement design changes. Once changes are made, the test is repeated to verify the desired results.
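The single-point-of-failure check described above can be illustrated with a toy model: fail each replica in turn and see whether requests are still served. The `Cluster` class and `survives_single_failure` function are assumptions made up for this sketch and do not model any real system.

```python
class Cluster:
    """Toy service with named replicas (hypothetical model)."""

    def __init__(self, replica_names):
        self.healthy = {name: True for name in replica_names}

    def fail(self, name):
        """Simulate the loss of one replica."""
        self.healthy[name] = False

    def handle(self, request):
        """Serve the request from the first healthy replica."""
        for name, ok in self.healthy.items():
            if ok:
                return f"{name} handled {request}"
        raise RuntimeError("no healthy replicas")


def survives_single_failure(replica_names):
    """Fail each replica in turn on a fresh cluster and check that requests still succeed."""
    for victim in replica_names:
        cluster = Cluster(replica_names)
        cluster.fail(victim)
        try:
            cluster.handle("ping")
        except RuntimeError:
            return False  # losing this one replica took the service down
    return True
```

A one-replica cluster fails the check, while adding a second replica makes it pass. This mirrors the cycle of finding a failure, changing the design and repeating the test.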

One notable real-world system failure had a chaos engineering connection. In 2015, Amazon's DynamoDB experienced an availability issue in one of its regions. That lapse caused more than 20 Amazon Web Services offerings that relied on DynamoDB to fail in that region. Sites that used the services, including Netflix, were down for several hours. However, Netflix experienced less of a failure than other sites, because it had created and used a chaos engineering tool called Chaos Kong to prepare for such a scenario.

Chaos Kong simulates the failure of an entire AWS region, a geographic area that contains multiple availability zones. Using the tool had given Netflix experience responding to regional outages like the one the DynamoDB issue caused. The company's ability to deal with the outage is often cited in explaining the importance of chaos engineering.

Chaos engineering tools

Netflix was a notable pioneer of chaos engineering and was among the first to use it in production systems. Netflix designed and open sourced chaos test automation platforms collectively dubbed the Simian Army.

The Simian Army suite includes several tools:

- Chaos Kong. Simulates the outage of an entire AWS region.

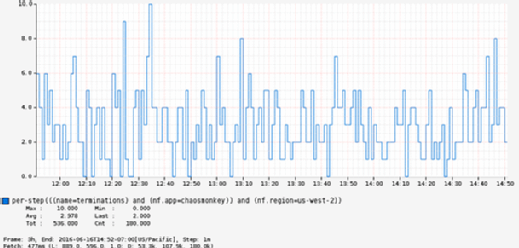

- Chaos Monkey. Randomly disables production instances to cause failures, but is designed so that customer activity is not affected.

- Chaos Gorilla. Like Chaos Monkey but on a larger scale: it simulates the outage of an entire AWS availability zone.

- Latency Monkey. Introduces latency to simulate network outages and degradation.
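The idea of randomly disabling instances can be sketched in a few lines. This is not Netflix's implementation; `pick_victims`, the fleet layout and the `min_group_size` guard are hypothetical choices made for illustration, and nothing is actually terminated.

```python
import random


def pick_victims(instances_by_group, rng, min_group_size=2):
    """Pick at most one instance per group, skipping groups too small to lose one."""
    victims = []
    for group, instances in sorted(instances_by_group.items()):
        if len(instances) < min_group_size:
            continue  # never take down the only instance of a group
        victims.append((group, rng.choice(sorted(instances))))
    return victims


# Example fleet (made-up names): one redundant group and one singleton.
fleet = {"api": ["api-1", "api-2", "api-3"], "db": ["db-1"]}
victims = pick_victims(fleet, random.Random(42))
```

Here only the `api` group is eligible, because the `db` group has a single instance. Passing in a seeded `random.Random` makes a run reproducible, which helps when an experiment needs to be repeated after a fix.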

The Netflix Simian Army continues to grow as more chaos-inducing programs are created to test the streaming service's capabilities. Some other chaos engineering tools include:

- Simoorg. An open source failure-inducing program. LinkedIn uses this program to perform chaos engineering experiments.

- Monkey-Ops. An open source tool implemented in Go and built to test and terminate random components and deployment configurations.

- Gremlin. A chaos engineering program that works with AWS and Kubernetes and focuses on the retail and finance sectors. It comes with built-in redundancy that stops chaos engineering experiments when they threaten the system.

- AWS Fault Injection Simulator. Includes fault templates that AWS can inject into production instances. The platform has built-in redundancy and protective measures to keep the failure injection testing from causing system problems.
