The evolution of containers: Docker, Kubernetes and the future

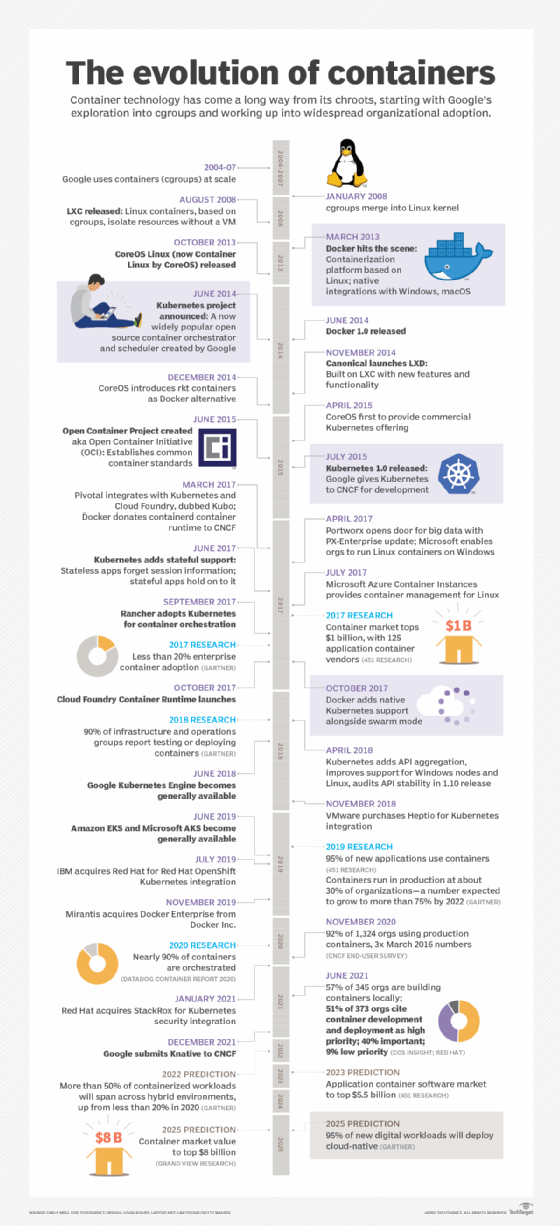

Container technology is almost as old as VMs, although IT paid it little attention until Docker, Kubernetes and related tools set off a frenzy of activity.

The rapid evolution of containers over the past two decades has changed the dynamic of modern IT infrastructure -- and it began before Docker's debut in 2013.

VM partitioning, which dates to the 1960s, enables multiple users to share one computer concurrently, with each user working in an isolated environment that appears to have the machine's full resources. The following decades were marked by widespread VM use and development. The modern VM serves a variety of purposes, such as running multiple OSes on one machine to host applications whose OS requirements differ from each other.

Then there was chroot

The evolution of containers leaped forward with the development of chroot in 1979 as part of Unix version 7. Chroot marked the beginning of container-style process isolation by restricting an application's file access to a specific directory -- the root -- and its children. A key benefit of chroot separation was improved system security, such that an isolated environment could not compromise external systems if an internal vulnerability was exploited.
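The confinement rule described above can be illustrated without root privileges. The following is a minimal sketch, not a real jail: `resolve_in_jail` and the `/srv/jail` directory are hypothetical names chosen for this example, and the function only models how a path requested inside the restricted root maps onto the host file system. It ignores symbolic links, which a real chroot environment has to account for.

```python
import posixpath


def resolve_in_jail(jail_root: str, requested: str) -> str:
    """Return the host path that a path requested inside the jail would reach.

    Illustration only: this models the lookup rule, it does not change the
    process root. On Unix, Python exposes the real call as os.chroot(), which
    requires root privileges.
    """
    # Treat the request as absolute inside the jail. Normalizing from "/"
    # mimics the rule that ".." at the root directory stays at the root.
    inside = posixpath.normpath(posixpath.join("/", requested))
    # Re-anchor the normalized path under the jail directory on the host.
    return posixpath.normpath(posixpath.join(jail_root, inside.lstrip("/")))


# An attempt to climb out with ".." still lands inside the jail directory.
print(resolve_in_jail("/srv/jail", "/../../etc/passwd"))  # /srv/jail/etc/passwd
```

Because every lookup is re-anchored under the jail directory, the process has no path name that refers to files outside it, which is the property the original chroot call introduced.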

The 2000s were alight with container technology development and refinement. Google introduced Borg, the organization's container cluster management system, in 2003. It relied on the isolation mechanisms that Linux already had in place.

In those early days of the evolution of containers, security wasn't much of a concern. Anyone could see what was going on inside the machine, which enabled a system of accounting for who was using the most memory and how to make the system perform better.

Nevertheless, this kind of container technology could only go so far. This led to the development of process containers, which Google engineers began work on in 2006 and which were renamed control groups, or cgroups, in 2007. Cgroups track groups of related processes and cap the resources, such as CPU time and memory, that each group can consume.
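As a rough illustration of how a cgroup limits memory, the sketch below uses the file-based interface of the current cgroup v2 hierarchy, which is newer than the original process containers work described here. The function name `confine_process` and the `demo` group are hypothetical. On a real system, the group directory would live under `/sys/fs/cgroup`, the caller would need root privileges, and the memory controller would have to be enabled for the parent group.

```python
from pathlib import Path


def confine_process(cgroup_dir: str, pid: int, memory_limit_bytes: int) -> None:
    """Create a cgroup directory, set a memory cap and move a process into it.

    Assumes the cgroup v2 layout: creating a directory creates the group,
    memory.max holds the hard memory limit in bytes, and writing a PID to
    cgroup.procs moves that process into the group.
    """
    group = Path(cgroup_dir)
    group.mkdir(parents=True, exist_ok=True)
    # Cap the total memory that all processes in this group may use.
    (group / "memory.max").write_text(f"{memory_limit_bytes}\n")
    # Place the process under the limit by adding it to the group.
    (group / "cgroup.procs").write_text(f"{pid}\n")


# Hypothetical usage on a cgroup v2 host, run as root:
# confine_process("/sys/fs/cgroup/demo", pid=1234, memory_limit_bytes=256 * 1024 * 1024)
```

Container runtimes perform steps of this kind on the user's behalf, which is why a memory limit on a container is enforced by the kernel rather than by the application.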

The cgroup concept was absorbed into the Linux kernel in January 2008, after which the Linux container technology LXC emerged. Namespaces developed shortly thereafter to provide the basis for container network security: to hide a user's or group's activity from others.

Docker and Kubernetes

Docker floated onto the scene in 2013 with an easy-to-use command-line interface and the ability to package, provision and run container technology. Because Docker enabled multiple applications with different dependencies to run in containers on the same OS kernel, IT admins and organizations saw opportunity for simplification and resource savings.

Within a month of its first test release, Docker was the playground for 10,000 developers. By the time Docker 1.0 was released in 2014, the software had been downloaded 2.75 million times, and within a year after that, more than 100 million.

Compared with VMs, containers have a significantly smaller resource footprint, are faster to spin up and down, and require less overhead to manage. VMs must also each encapsulate a fully independent OS and other resources, while containers share the same OS kernel and use a proxy system to connect to the resources they need, depending on where those resources are located.

Concern and hesitation arose in the IT community regarding the security of a shared OS kernel. A vulnerable container could result in a vulnerable ecosystem without the right precautions baked into the container technology.

Additional complaints early in the modern evolution of containers bemoaned the lack of data persistence, which is important to the vast majority of enterprise applications. Efficient networking also posed problems, as well as the logistics of regulatory compliance and distributed application management.

Container technology ramped up in 2017. Companies such as Pivotal, Rancher, AWS and even Docker changed gears to support the open source Kubernetes container scheduler and orchestration tool, cementing its position as the default container orchestration technology.

In April 2017, Microsoft enabled organizations to run Linux containers on Windows Server. This was a major development for Microsoft shops that wanted to containerize applications and stay compatible with their existing systems.

Over time, container vendors have addressed security and management issues with tool updates, additions, acquisitions and partnerships, although that doesn't mean containers are perfect in the 2020s.

Modern containers and concerns

Cloud container management, accompanied by the necessary monitoring, logging and alert technology, is an active area for container-adopting organizations. Containers offer more benefits for distributed applications -- particularly microservices -- than for larger, monolithic ones. Each service can be fully contained and scaled independently from others with the help of an orchestrator tool such as Kubernetes, which reduces resource overhead on applications with features that aren't as heavily used as others.

To that end, various public and private cloud providers offer managed container services -- usually via Kubernetes, such as Azure Kubernetes Service and Google Kubernetes Engine -- to make container deployment in the cloud more streamlined, scalable and accessible to administrators. AI and machine learning technologies are attracting similar interest and participation among enterprises both on and off the cloud for improved metrics and data analysis, as well as error prediction, automated alerts and incident resolution.

And the evolution of container technology has not come to an end. Mirantis, a cloud computing services company, purchased Docker Enterprise in 2019, and Kubernetes has long since cemented its position as the de facto container orchestration standard. Other major vendors regularly acquire smaller startups to bolster their toolchain offerings and fill in gaps with specialized tools.

A 2019 Diamanti survey of more than 500 IT organizations revealed that security was users' top challenge with the technology, followed by infrastructure integration. Sysdig noted a 100% increase in container density from 2018 to 2019 in its container use survey.

Since 2020, there has been an array of acquisitions designed to improve container and Kubernetes security. SUSE acquired Rancher in December 2020 and NeuVector in late 2021; Cisco acquired Portshift in October 2020; and Red Hat acquired StackRox in January 2021.

The heightened focus on security is reflected in containerization usage reports published in 2022:

- Sysdig's "2022 Cloud-Native Security and Usage Report" found that 75% of respondents were running containers with high or critical vulnerabilities, and 76% had containers running as root, playing a dangerous game with IT ecosystem safety.

- In Red Hat's "2022 State of Kubernetes Security Report," 93% of respondents reported experiencing at least one Kubernetes-related security incident within the prior 12 months, and 31% experienced revenue or customer loss as a result.

- In VMware's "The State of Kubernetes 2022" report, 36% of respondents said data security, protection and encryption were the most important tools and capabilities for production Kubernetes use. Nearly all those surveyed (97%) had concerns about Kubernetes security, especially multi-cluster and multi-cloud deployments.
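The root-user finding in the Sysdig report lends itself to a simple automated check. The sketch below is a heuristic under stated assumptions, not a full audit: it takes a Kubernetes Pod manifest already parsed into a Python dictionary and flags containers whose `securityContext` neither sets `runAsNonRoot` to true nor specifies a nonzero `runAsUser`. The function name and the sample Pod are hypothetical, and the check cannot see the user configured inside the container image itself.

```python
def containers_possibly_running_as_root(pod: dict) -> list:
    """Return the names of containers that may run as root (heuristic)."""
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext") or {}
    flagged = []
    for container in spec.get("containers", []):
        # Container-level settings take precedence over Pod-level ones.
        ctx = {**pod_ctx, **(container.get("securityContext") or {})}
        non_root_enforced = ctx.get("runAsNonRoot") is True
        uid = ctx.get("runAsUser")
        # Flag an explicit root UID, or no guard of any kind against root.
        if uid == 0 or (not non_root_enforced and uid is None):
            flagged.append(container["name"])
    return flagged


sample_pod = {
    "spec": {
        "securityContext": {"runAsNonRoot": True},
        "containers": [
            {"name": "web"},
            {"name": "admin", "securityContext": {"runAsUser": 0}},
        ],
    }
}
print(containers_possibly_running_as_root(sample_pod))  # ['admin']
```

A check like this could run in a CI pipeline before manifests are applied, although an admission controller or policy engine would be the more robust place to enforce the rule.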

The future of containers

No matter how container technology evolves, we'll see more of it. In 2018, analyst firm Gartner predicted enterprise container use would reach up to 50% adoption in 2020 -- more than double the 20% recorded in 2017.

Actual recorded adoption in 2020 was still under 30%, according to a Gartner press release in June of that year. But by 2021, 96% of participating organizations were using Kubernetes and container technology or were evaluating the technology, according to the 2021 annual survey from the Cloud Native Computing Foundation, which demonstrates a major jump in enterprise containerization use.

Gartner predicted in 2020 that more than 75% of organizations would run containers in production by 2022. In 451 Research's 2022 "Voice of the Enterprise" survey, more than 58% of respondents said they were actively adopting containers, with an additional 31% in proof-of-concept stages or planning trials. If that 31% all complete successful adoptions, the combined adoption rate would reach roughly 89%, well above the 75% Gartner predicted in 2020.

Skills remain a hindrance

There's no way to talk about the future of containers without addressing the skills gap across the IT industry. Organizations across most, if not all, verticals worldwide are struggling to find IT pros with the right mix of skills -- and the problem worsens in parallel with advancing role requirements.

For many organizations, containers are the path to the future, but it's difficult to manage a containerized environment if there aren't enough container-savvy professionals to oversee it. Containerized systems tend to automate the basic tasks that would typically fall to entry-level admins, but reducing or eliminating those tasks raises the bar for necessary skills, which can be especially difficult to acquire for newcomers. This dearth of the most-needed skills is containerization technology's tallest hurdle to clear, and there's no end -- or tangible predictions of an end -- in sight.