Kubernetes

What is Kubernetes?

Kubernetes, also referred to as K8s for the number of letters between K and S, is an open source platform used to manage containerized applications across private, public and hybrid cloud environments. Organizations can also use Kubernetes to manage microservice architectures. Containers and Kubernetes are deployable on most cloud providers.

Application developers, IT system administrators and DevOps engineers use Kubernetes to automatically deploy, scale, maintain, schedule and operate multiple application containers across clusters of nodes. Containers run on top of a common shared operating system (OS) on host machines but are isolated from each other unless a user chooses to connect them.

What is Kubernetes used for?

Enterprises primarily use Kubernetes to manage and federate containers, as well as to manage passwords, tokens, Secure Shell (SSH) keys and other sensitive information. But enterprises also find Kubernetes useful in other cases, including the following:

- Orchestrate containers. Kubernetes primarily serves as a platform for orchestrating containers. It automates deployment, scaling and management of containerized applications, letting developers focus on writing code rather than managing infrastructure.

- Enhance service discovery. Kubernetes gives services stable names and addresses, so containerized applications can automatically find one another across a cluster network without hardcoded addresses.

- Manage hybrid cloud and multi-cloud. Kubernetes can help businesses extend on-premises workloads into the cloud and across multiple clouds. Hosting nodes in multiple clouds and availability zones or regions increases resiliency and provides flexibility for a business to choose different service configuration options.

- Expand PaaS options. Kubernetes can support serverless workloads, which could eventually give rise to new types of platform-as-a-service (PaaS) options with benefits ranging from improved scalability and reliability to more granular billing and lower costs.

- Wrangle data-intensive workloads. The Google Cloud Dataproc for Kubernetes service lets IT teams run Apache Spark jobs, which perform large-scale data analytics, on Kubernetes clusters.

- Extend edge computing. Organizations that already run Kubernetes in their data centers and clouds can use it to extend those capabilities out to edge computing environments. This could involve small server farms located outside a traditional data center or in the cloud, or an industrial internet of things (IoT) model. Because edge computing and IoT components can be tightly coupled with application components in the data center, Kubernetes can help deploy, maintain and manage them together.

- Microservices architecture. Kubernetes frequently hosts microservices-based systems. It offers the tools required to handle major issues with microservice architectures, including fault tolerance, load balancing and service discovery.

- DevOps practices. Kubernetes plays a key role in DevOps practices by providing a quick way to develop, deploy and scale applications. Its support for continuous integration/continuous delivery (CI/CD) pipelines makes it possible to deliver software more quickly and effectively.
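Service discovery in Kubernetes usually works through cluster DNS: each Service gets a predictable name of the form `<service>.<namespace>.svc.<cluster-domain>`. The Python sketch below only builds that name. `service_dns_name` is a hypothetical helper, and the default domain `cluster.local` is an assumption, since a cluster can be configured with a different domain.

```python
# Hypothetical helper: builds the DNS name a Service receives from cluster DNS.
# Assumes the default cluster domain "cluster.local"; clusters can configure another one.


def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the fully qualified DNS name for a Service in a namespace."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # A pod could reach a "checkout" Service in the "shop" namespace at this name.
    print(service_dns_name("checkout", "shop"))  # checkout.shop.svc.cluster.local
```

Because the name depends only on the Service and namespace, clients keep working when the pods behind the Service are replaced.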

Common Kubernetes terms

The following basic terms can help users grasp how Kubernetes and its deployment work:

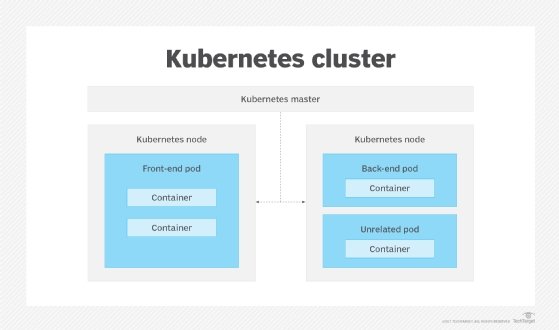

- Cluster. A set of machines on which containerized applications are managed and run. The cluster is the foundation of the Kubernetes engine.

- Node. Worker machines that make up clusters.

- Pod. A group of one or more containers that are deployed together on the same host machine. The pod is the smallest deployable unit in Kubernetes.

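- Replication controller. An abstraction used to manage pod lifecycles by keeping a specified number of pod replicas running. Newer deployments typically use ReplicaSets managed by Deployments for this purpose.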

- Selector. A matching system used for finding and classifying specific resources.

- Label. Key-value pairs used to filter, organize and perform mass operations on a set of resources.

- Annotation. Key-value metadata similar to a label, except that it isn't used to select resources and can hold much larger amounts of data.

- Ingress. An application programming interface (API) object that controls external access, usually HTTP, to services in a cluster. It can offer name-based virtual hosting, load balancing and Secure Sockets Layer/Transport Layer Security (SSL/TLS) termination.
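Labels and selectors work as a pair: labels tag resources, and a selector picks out the resources whose labels satisfy every one of its terms. Kubernetes selectors support equality-based terms and set-based operators (`In`, `NotIn`, `Exists`, `DoesNotExist`). The sketch below is a simplified, in-memory model of that matching logic, not the Kubernetes client library; `Requirement`, `selector_matches` and the pod names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Requirement:
    """One set-based selector term, e.g. key "tier", operator "In", values ["frontend"]."""
    key: str
    operator: str  # "In", "NotIn", "Exists" or "DoesNotExist"
    values: List[str] = field(default_factory=list)


def selector_matches(labels: Dict[str, str],
                     match_labels: Dict[str, str],
                     match_expressions: List[Requirement]) -> bool:
    """Return True if a resource's labels satisfy every selector term (terms are ANDed)."""
    # Equality-based terms: each key must be present with exactly this value.
    for key, value in match_labels.items():
        if labels.get(key) != value:
            return False
    # Set-based terms.
    for req in match_expressions:
        present = req.key in labels
        if req.operator == "In":
            ok = present and labels[req.key] in req.values
        elif req.operator == "NotIn":
            # Resources that lack the key also satisfy NotIn.
            ok = not present or labels[req.key] not in req.values
        elif req.operator == "Exists":
            ok = present
        elif req.operator == "DoesNotExist":
            ok = not present
        else:
            raise ValueError(f"unknown operator: {req.operator}")
        if not ok:
            return False
    return True


if __name__ == "__main__":
    pods = {
        "web-1": {"app": "web", "tier": "frontend"},
        "web-canary": {"app": "web", "track": "canary"},
        "db-1": {"app": "db", "tier": "backend"},
    }
    # Select web pods, excluding anything on the canary track.
    selected = [name for name, labels in pods.items()
                if selector_matches(labels, {"app": "web"},
                                    [Requirement("track", "NotIn", ["canary"])])]
    print(selected)  # ['web-1']
```

Since all terms are ANDed, adding a term can only narrow the set of selected resources.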

How does Kubernetes infrastructure work?

Kubernetes infrastructure includes multiple elements that help users deploy and administer containerized applications, including physical or virtual servers, cloud platforms and other relevant components.

The following is a quick dive into Kubernetes container management, its components and how it works.

Pods

Pods consist of one or more containers located on the same host machine, and those containers can share resources such as storage volumes and a network namespace. Kubernetes finds a machine that has enough free compute capacity for a given pod and launches the pod's containers there. Each pod is assigned its own Internet Protocol (IP) address, so applications in different pods can use the same ports without conflicting.
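Finding a machine with enough free capacity can be pictured as a filter step followed by a scoring step. The sketch below is a deliberately simplified model, not the real kube-scheduler, which runs many filtering and scoring plugins. It keeps only nodes whose free CPU and memory cover the pod's requests, then prefers the node with the most CPU left over. `Node`, `pick_node` and the resource figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_request: int, memory_request: int) -> Optional[Node]:
    """Choose a node for a pod with the given requests, or None if nothing fits."""
    # Filter: keep nodes with enough free capacity for the pod's requests.
    feasible = [n for n in nodes
                if n.free_cpu_millicores >= cpu_request
                and n.free_memory_mib >= memory_request]
    if not feasible:
        return None  # The pod would stay Pending until capacity frees up.
    # Score (simplified): prefer the node with the most CPU left after placement.
    return max(feasible, key=lambda n: n.free_cpu_millicores - cpu_request)


if __name__ == "__main__":
    nodes = [Node("node-a", 500, 2048), Node("node-b", 2000, 1024), Node("node-c", 1500, 4096)]
    print(pick_node(nodes, 1000, 2048).name)  # node-c
```

Spreading pods toward the least-loaded node is only one possible scoring policy; packing pods tightly onto fewer nodes is another.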

Node agent

A node agent, called the kubelet, manages the pods on its node, along with their containers and images. The kubelet also automatically restarts a container if it fails. Alternatively, the Kubernetes API can be used to manage pods manually.
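The Kubernetes documentation describes the kubelet restarting a failed container, subject to the pod's restart policy, with an exponential back-off delay (10 seconds, 20 seconds, 40 seconds and so on) capped at five minutes, and resetting that timer once a container has run for 10 minutes without problems. The sketch below only computes that delay schedule. `restart_delays` is a hypothetical helper, and the exact values may differ between Kubernetes versions.

```python
from typing import List


def restart_delays(restarts: int, initial: int = 10, cap: int = 300) -> List[int]:
    """Delays in seconds before each of the first `restarts` restarts (doubling, capped)."""
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)  # Double the wait, but never exceed the cap.
    return delays


if __name__ == "__main__":
    print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

The cap keeps a crash-looping container from being retried too aggressively while still retrying indefinitely.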

ReplicationController

A Kubernetes ReplicationController manages sets of pods using a reconciliation loop that drives the cluster toward a desired state. This ensures that the number of running pods matches the user's specification. It can create replacement pods if a node fails, and it can manage, replicate and scale existing pods.

The ReplicationController scales pods horizontally, so the number of replicas can be adjusted as the overall application's computing needs fluctuate. For other workloads, a Job controller can manage batch work, and a DaemonSet controller can run one copy of a pod on each node in a set.
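The reconciliation loop reduces to three steps: observe the actual state, compare it with the desired state, and act on the difference. The toy class below keeps a list of pod names in memory and converges it toward a desired replica count. `ToyReplicationController` and its `web-N` naming scheme are hypothetical stand-ins for the real controller, which watches the API server rather than a Python list.

```python
from typing import List


class ToyReplicationController:
    """Keeps `replicas` copies of a pod running (simplified, in-memory model)."""

    def __init__(self, replicas: int) -> None:
        self.replicas = replicas
        self.pods: List[str] = []
        self._next_id = 0

    def _new_pod_name(self) -> str:
        self._next_id += 1
        return f"web-{self._next_id}"

    def reconcile(self) -> None:
        # Too few pods (for example, after a node failure): create replacements.
        while len(self.pods) < self.replicas:
            self.pods.append(self._new_pod_name())
        # Too many pods (for example, after scaling down): remove the surplus.
        while len(self.pods) > self.replicas:
            self.pods.pop()


if __name__ == "__main__":
    rc = ToyReplicationController(replicas=3)
    rc.reconcile()            # ['web-1', 'web-2', 'web-3']
    rc.pods.remove("web-2")   # Simulate losing a pod with its node.
    rc.reconcile()            # ['web-1', 'web-3', 'web-4']
    rc.replicas = 2           # Scale down.
    rc.reconcile()
    print(rc.pods)            # ['web-1', 'web-3']
```

Because each pass only reacts to the current difference, running the loop repeatedly is safe: once actual and desired state match, it does nothing.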

Other Kubernetes infrastructure elements and their primary functions include the following:

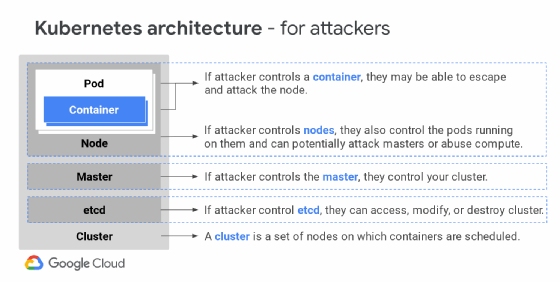

Master node

The master node, now usually called a control plane node, runs the Kubernetes API server and controls the cluster. It is part of the control plane and manages communications and workloads across the cluster.

Node

A node, historically also called a minion, is a worker machine in Kubernetes. It can be either a physical machine or a virtual machine (VM). Nodes have the services needed to run pods and receive management instructions from control plane components. Services found on nodes include a container runtime, such as containerd, CRI-O or, in older clusters, Docker, as well as kube-proxy and the kubelet.

Security

Security is broken into four layers: Cloud or Data Center, Cluster, Container and Code. Stronger security measures continue to be created, tested and deployed regularly.

Services and labels

An abstraction called a Service acts as an automatically configured load balancer and integrator that runs across the cluster, giving a group of pods a single stable address. Labels are key-value pairs used for service discovery: labels tag pods, and a Service's selector uses those labels to link the pods together into a group.
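Put together, a Service's endpoints are the pods whose labels match its selector, and traffic is spread across those endpoints. The sketch below uses equality-based matching and simple round-robin rotation. `endpoints` and `round_robin` are hypothetical names, readiness checks are ignored, and real kube-proxy backend selection depends on its mode (in iptables mode, for example, backends are picked at random).

```python
import itertools
from typing import Dict, Iterator, List


def endpoints(pods: Dict[str, Dict[str, str]], selector: Dict[str, str]) -> List[str]:
    """Names of pods whose labels include every key-value pair in the selector."""
    return [name for name, labels in pods.items()
            if all(labels.get(key) == value for key, value in selector.items())]


def round_robin(backends: List[str]) -> Iterator[str]:
    """Simplified load balancing: cycle through the backends in order."""
    if not backends:
        raise ValueError("Service has no endpoints")
    return itertools.cycle(backends)


if __name__ == "__main__":
    pods = {"web-1": {"app": "web"}, "web-2": {"app": "web"}, "db-1": {"app": "db"}}
    picker = round_robin(endpoints(pods, {"app": "web"}))
    print([next(picker) for _ in range(4)])  # ['web-1', 'web-2', 'web-1', 'web-2']
```

Clients only ever see the Service address; which pod answers is decided behind it, so pods can come and go without clients noticing.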

Networking

Kubernetes is all about sharing machines between applications. Because each pod gets its own IP address, Kubernetes offers a clean, backward-compatible model in which pods can be treated much like VMs in terms of port allocation, naming, service discovery, load balancing, application configuration and migration.
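A common arrangement, though the details depend on the cluster's network plugin, is for each node to receive its own pod CIDR range and hand out pod addresses from it. The sketch below models that with Python's standard `ipaddress` module. `assign_pod_ips`, the range `10.244.1.0/24` and the choice to reserve the first usable address are illustrative assumptions, not how any particular plugin allocates IPs.

```python
import ipaddress
from typing import Dict, List


def assign_pod_ips(pod_cidr: str, pods: List[str]) -> Dict[str, str]:
    """Give each pod a unique address from a node's pod CIDR (toy IP address management)."""
    network = ipaddress.ip_network(pod_cidr)
    # hosts() skips the network and broadcast addresses; we also reserve the
    # first usable address, assuming it is used by a bridge or gateway.
    usable = list(network.hosts())[1:]
    if len(pods) > len(usable):
        raise ValueError(f"pod CIDR {pod_cidr} exhausted")
    return {pod: str(ip) for pod, ip in zip(pods, usable)}


if __name__ == "__main__":
    print(assign_pod_ips("10.244.1.0/24", ["web-1", "web-2"]))
    # {'web-1': '10.244.1.2', 'web-2': '10.244.1.3'}
```

Since every pod has a distinct address, two pods on the same node can both listen on port 80 without any port remapping.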

Registry

Kubernetes can pull container images directly from private registries such as Amazon Elastic Container Registry (Amazon ECR). When the cluster's nodes are authorized to pull from ECR, every user in the cluster who can create pods can run pods that use any image in that registry.

Benefits of Kubernetes

Kubernetes lets users schedule, run and monitor containers, typically in clustered configurations, and automate related operational tasks.

Common benefits of Kubernetes include the following:

- Deployment. Kubernetes sets and modifies the desired state of container deployments. It can create new container instances, shift workloads to them and remove the old ones. DevOps teams can use Kubernetes to set policies for automation, scalability and app resiliency, and quickly roll out code changes. Metrics used to guide Kubernetes deployment can also play into an AIOps strategy.

- Monitoring. Kubernetes continuously checks container health, restarts failed containers and removes unresponsive ones.

- Load balancing. Kubernetes distributes traffic across multiple container instances.

- Storage. Kubernetes can handle varied storage types for container data, from local storage to cloud resources.

- Optimization. It can add a level of intelligence to container deployments, such as resource optimization -- by identifying which worker nodes are available and which resources are required for containers, and automatically fitting containers onto those nodes.

- Security. Kubernetes is also used to manage passwords, tokens, SSH keys and other sensitive information.

- Availability. It can ensure high availability of containers by automatically restarting failed containers or rescheduling them on healthy nodes.

- Open source. Kubernetes is an open source project, which is continuously evolving and benefiting from contributions from a wide range of developers and organizations. Being highly extensible, it lets users customize and extend its functionality to meet specific requirements.
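Deployments carry out the replace-as-you-go process in the Deployment item above as a rolling update, bounded by the `maxSurge` and `maxUnavailable` settings, which can be absolute numbers or percentages and default to 25%. The simulation below uses absolute numbers only and assumes new pods become ready instantly. `rolling_update` is a hypothetical function that returns the old and new pod counts at the end of each scale-up/scale-down round.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """(old, new) pod counts after each round of a simplified rolling update.

    Assumes new pods are ready immediately, so every running pod counts as available.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new version, never exceeding replicas + max_surge pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down the old version, keeping at least replicas - max_unavailable pods.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    print(rolling_update(3))  # [(3, 0), (2, 1), (1, 2), (0, 3)]
```

With `max_unavailable=0`, capacity never drops below the requested replica count, at the cost of briefly running extra pods; allowing unavailability instead avoids the extra pods but temporarily reduces capacity.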

Challenges of using Kubernetes

Kubernetes often requires role and responsibility changes within an existing IT department as organizations decide which hosting model to deploy: a public cloud or on-premises servers. Challenges of using Kubernetes vary depending on the organization's size, number of employees, scalability and infrastructure.

Common challenges with Kubernetes include the following:

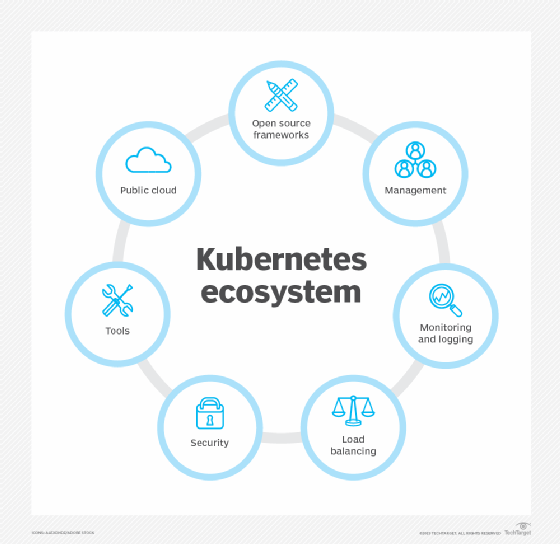

- Difficult DIY. Some enterprises desire the flexibility to run open source Kubernetes themselves if they have the skilled staff and resources to support it. Many others will choose a package of services from the broader Kubernetes ecosystem to help simplify its deployment and management for IT teams.

- Load scaling. Pieces of an application in containers can scale differently or not at all under load, which is a function of the application, not the method of container deployment. Organizations must factor in how to balance pods and nodes.

- Distributed complexity. Distributing application components in containers provides the flexibility to scale features up and down -- but too many distributed app components increase complexity and can affect network latency and reduce availability.

- Monitoring and observability. As organizations expand container deployment and orchestration for more workloads in production, it becomes harder to know what's going on behind the scenes. This creates a heightened need to better monitor various layers of the Kubernetes stack, and the entire platform, for performance and security.

- Nuanced security. Deploying containers into production environments adds many levels of security and compliance: vulnerability analysis on code, multifactor authentication and simultaneous handling of multiple stateless configuration requests. Proper configuration and access controls are crucial, especially as adoption widens and more organizations deploy containers into production. In 2020, Kubernetes launched a bug bounty program to reward those who find security vulnerabilities in the core Kubernetes platform.

- Vendor lock-in. Although Kubernetes is an open source platform, challenges can arise in terms of vendor lock-in when opting for managed Kubernetes services offered by cloud providers. The process of migrating from one Kubernetes service to another or overseeing multi-cloud deployments can introduce complexities.
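For the load-scaling challenge above, the Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default) of 1.0. The function below is a minimal sketch of that rule. Its name, the clamping defaults of 1 and 10 replicas and the example metric values are illustrative assumptions, and the real controller also accounts for unready pods, missing metrics and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the basic HPA ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # Close enough to the target: don't scale.
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale out.
    print(desired_replicas(4, 90, 60))  # 6
```

The tolerance band keeps the replica count from flapping when the metric hovers near its target.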

Who are Kubernetes' competitors?

Kubernetes was designed as an environment to build distributed applications in containers. It can be adopted as the upstream, open source version or as a proprietary, supported distribution.

While Kubernetes is one of the leading orchestration tools, it's certainly not the only one. The orchestration landscape is dynamic and various alternatives and options are available for companies seeking to schedule and orchestrate containers.

Popular competitors of Kubernetes include the following:

- Docker Swarm. Docker Swarm, a container orchestration engine for Docker containers, offers native clustering capabilities with lower barriers to entry and fewer commands than Kubernetes. Swarm users are encouraged to use Docker infrastructure but aren't blocked from using other infrastructures. In practice, Docker and Kubernetes are also often used together: Docker lets a business create, run and manage containers on a single OS, and Kubernetes can then automate provisioning, networking, load balancing, security and scaling of those containers across nodes from a single dashboard. Mirantis acquired the Docker Enterprise business in late 2019 and initially intended to focus on Kubernetes, but later pledged to support and expand the enterprise version of Docker Swarm.

- Jenkins. Jenkins, also an open source tool, is a continuous integration server that offers easy installation, easy configuration and change set support, as well as internal hosting capabilities. Jenkins addresses a different problem than Kubernetes: it automates building and testing code, whereas Kubernetes runs and orchestrates containers, and the two are often used together. Kubernetes, as a container platform, is built for a multi-cloud world, whether public or private.

- Nomad. Nomad, created by HashiCorp, is an open source cluster manager and scheduler. Emphasizing simplicity and flexibility, it lets users deploy and oversee applications across a machine cluster without requiring complex configurations.

Kubernetes support and enterprise product ecosystem

As an open source project, Kubernetes underpins several proprietary distributions and managed services from cloud vendors, including the following:

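- Amazon Elastic Kubernetes Service. Amazon Web Services offers a managed Kubernetes service called Amazon EKS, which runs the Kubernetes control plane for customers and removes much of the complexity of deploying and administering Kubernetes clusters. AWS also offers Amazon Elastic Container Service, a separate container orchestration service that doesn't use Kubernetes.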

- Canonical Kubernetes. Canonical Kubernetes is a cloud-hosted Kubernetes that's built on the Ubuntu OS.

- Google Kubernetes Engine. GKE simplifies the management and deployment of Kubernetes clusters, freeing customers to concentrate on their applications rather than the underlying infrastructure.

- IBM Red Hat OpenShift. OpenShift is a container application platform for enterprises based on Kubernetes. It targets fast application development, easier deployment and automation, while also supporting container storage and multi-tenancy.

- Rancher. Rancher, originally developed by Rancher Labs, which SUSE acquired in 2020, is an open source container management platform specifically targeted toward organizations that deploy containers in production.

- Mirantis. Mirantis is another example of an open source product ecosystem based on Kubernetes that can be used for IoT. The product is designed to manage IQRF networks and gateways for IoT applications such as smart cities.

What is the history of Kubernetes?

In the past, organizations ran applications on physical servers, with no way to define resource boundaries, leading to resource allocation issues. To address this, virtualization was introduced. It allows multiple VMs to run simultaneously on a single physical server's CPU. Applications can be isolated in separate VMs, which increases security because one application's information can't be freely accessed by another.

Containers resemble VMs but with relaxed isolation properties. Just like a VM, a container has a file system, CPU, memory, process space and other properties. Containers can be created, deployed and integrated quickly across diverse environments.

Kubernetes was created by Google and inspired by the company's internal data center management software called Borg. Google open sourced Kubernetes in 2014 and released version 1.0 in 2015, when it donated the project to the newly formed Cloud Native Computing Foundation (CNCF). Since then, Kubernetes has attracted major contributors from various corners of the container industry, and in 2018 it became the first project to graduate from the CNCF.

Kubernetes is open source, so anyone can contribute to the Kubernetes project via one or more Kubernetes special interest groups. Top corporations that commit code to the project include IBM, Rackspace and Red Hat. IT vendors have developed support and integrations for the management platform, while community members use open source tools to fill gaps in vendor integrations.

Kubernetes adopters range from cloud-based document management services to telecom giant Comcast, financial services conglomerate Fidelity Investments and enterprises including SAP Concur and Tesla.

What is the future for Kubernetes?

Kubernetes versions follow Semantic Versioning and are expressed as x.y.z, where x is the major version, y is the minor version and z is the patch version. The latest minor version of Kubernetes at the time of writing is 1.28, which was released in August 2023. The 2019 updates, versions 1.14 through 1.16, were significant minor releases that added or improved several areas to further support stability and production deployment. These include the following:

- Support for Windows hosts and Windows-based Kubernetes nodes.

- Extensibility and cluster lifecycle.

- Volume and metrics.

- Custom resource definitions.
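Because each part of the x.y.z version scheme described above is a separate integer, versions must be compared numerically rather than as strings; as plain strings, "1.9.11" would sort after "1.28.0". A minimal sketch, assuming plain release versions without pre-release suffixes such as `-rc.1`; `parse_version` is a hypothetical helper.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Split 'v1.28.3' or '1.28.3' into (major, minor, patch) integers."""
    major, minor, patch = version.lstrip("v").split(".")
    return int(major), int(minor), int(patch)


if __name__ == "__main__":
    versions = ["1.9.11", "1.28.0", "1.16.2"]
    print(sorted(versions))                     # ['1.16.2', '1.28.0', '1.9.11'] (string order)
    print(sorted(versions, key=parse_version))  # ['1.9.11', '1.16.2', '1.28.0']
```

Comparing tuples of integers gives the intended order: the major version decides first, then the minor version, then the patch.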

Since then, industry interest has shifted away from updates to the core Kubernetes platform and more toward higher-level areas where enterprises can benefit from container orchestration and cloud-native applications. These include sensitive workloads that require multi-tenant security, more fluid management of stateful applications such as databases, and fostering GitOps version-controlled automated releases of applications and software-defined infrastructure.

As organizations expand container deployment and orchestration for more workloads in production, it becomes even harder to know what's going on behind the scenes. This increases the need to better monitor various layers of the Kubernetes stack, and the entire Kubernetes platform, for performance and security. Markets to serve these emerging areas with third-party tools have already formed, with startups -- some through the CNCF -- as well as experienced vendors. At the same time, the Kubernetes ecosystem continues to consist of dozens of Kubernetes distributions and vendors, which will probably narrow in the future.

While it's challenging to predict the exact trajectory of Kubernetes, the following trends are expected to grow:

- Serverless Kubernetes. Kubernetes is expected to continue to integrate with serverless frameworks such as Knative, letting customers run serverless workloads on Kubernetes clusters.

- Machine learning. Although Kubernetes is already being used to manage machine learning workloads, there's room for improvement, such as through integration with well-known machine learning frameworks or the addition of more specialized features.

- Edge computing. Workload management at the edge -- where devices are connected to the cloud -- is another application for Kubernetes. Kubernetes can play a significant role in workload management in these distributed systems as edge computing grows.

- Multi-cloud and hybrid cloud deployments. The importance of Kubernetes in efficiently managing workloads across diverse cloud environments will only grow as more companies embrace multi-cloud and hybrid cloud strategies.

While Kubernetes is frequently set up as a cluster on a single cloud, opting for a multi-cloud cluster presents several advantages.