
What are cloud containers and how do they work?

Containers in cloud computing have evolved beyond a security buzzword. Deploying cloud containers is now an essential element of protecting IT infrastructure.

Cloud containers remain a hot topic across IT, and especially in security. The world's top technology companies, including Microsoft, Google and Facebook, all use them. For example, Google has said that everything it runs is in containers and that it launches several billion containers each week.

Containers have seen increased use in production environments over the past decade. They continue the modularization of DevOps, enabling developers to adjust separate features without affecting the entire application. Containers promise a streamlined, easy-to-deploy and secure method of implementing specific infrastructure requirements and are a lightweight alternative to VMs.

How do cloud containers work?

Container technology has roots in partitioning and chroot process isolation, which originated in Unix and carried over into Linux. The modern forms of containers are expressed in application containerization, such as Docker, and in system containerization, such as Linux Containers (LXC). Both enable an IT team to abstract application code from the underlying infrastructure to simplify version management and enable portability across various deployment environments.

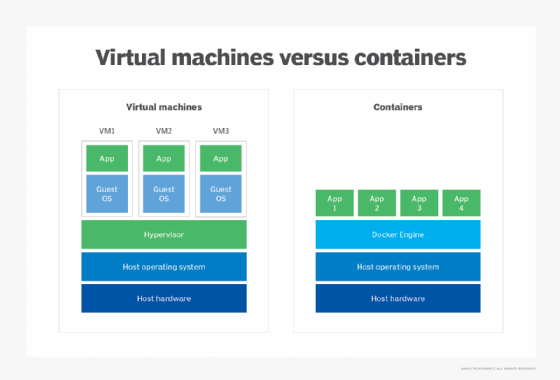

Containers rely on virtual isolation to deploy and run applications that access a shared OS kernel without the need for VMs. Containers hold all the necessary components, such as files, libraries and environment variables, to run desired software without worrying about platform compatibility. The host OS constrains the container's access to physical resources so a single container cannot consume all of a host's physical resources.
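As a rough illustration of how a host can cap what a single container consumes, the sketch below assembles a `docker run` command that uses Docker's `--memory` and `--cpus` options. The image name `example/app:1.0` and the limit values are hypothetical, and the helper `build_run_command` is an assumption made for this example rather than part of any tool.

```python
def build_run_command(image, memory="512m", cpus="0.5"):
    """Return an argv list for `docker run` with resource limits applied."""
    return [
        "docker", "run", "--rm",
        "--memory", memory,  # upper bound on the RAM the container may use
        "--cpus", cpus,      # how much CPU capacity the container may use
        image,
    ]


# Hypothetical image name, used only for illustration.
command = build_run_command("example/app:1.0")
print(" ".join(command))
```

On a host with Docker installed, the resulting list could be passed to `subprocess.run`. The sketch only builds and prints the command, so it runs anywhere.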

The key thing to recognize with cloud containers is that they are designed to virtualize a single application. For example, you have a MySQL container, and that's all it does -- it provides a virtual instance of that application. Containers create an isolation boundary at the application level rather than at the server level. This isolation means that, if anything goes wrong in that single container -- for example, excessive consumption of resources by a process -- it only affects that individual container and not the whole VM or whole server. It also eliminates compatibility problems between containerized applications that reside on the same OS.

Major cloud vendors offer containers-as-a-service products, including Amazon Elastic Container Service, AWS Fargate, Google Kubernetes Engine, Microsoft Azure Container Instances, Azure Kubernetes Service and IBM Cloud Kubernetes Service. Containers can also be deployed on public or private cloud infrastructure without the use of dedicated products from a cloud vendor.

There are still key questions that need answers: How exactly do containers differ from traditional hypervisor-based VMs? And, just because containers are so popular, does it mean they are better?

Cloud containers vs. VMs

The key differentiator with containers is the minimalist nature of their deployment. Unlike VMs, they don't need a full OS to be installed within the container, and they don't need a virtual copy of the host server's hardware. Containers can operate with a minimum amount of resources to perform the task they were designed for -- this can mean just a few pieces of software, libraries and the basics of an OS. As a result, a server can host two to three times as many containers as VMs, and containers can be spun up much faster than VMs.

Cloud containers are also portable. Once a container has been created, it can easily be deployed to different servers. From a software lifecycle perspective, this is great, as containers can quickly be copied to create environments for development, testing, integration and production. From a software and security testing perspective, this is advantageous because it ensures the underlying OS is not causing a difference in the test results. Containers also offer a more dynamic environment as IT can scale up and down more quickly based on demand, keeping resources in check.

One downside of containers is the problem of splitting your virtualization into lots of smaller chunks. When there are just a few containers involved, it's an advantage because you know exactly what configuration you're deploying and where. However, if you fully invest in containers, it's quite possible to soon have so many containers that they become difficult to manage. Just imagine deploying patches to hundreds of different containers. If a specific library needs updating inside a container because of a security vulnerability, do you have an easy way to do this? Problems of container management are a common complaint, even with orchestration tools, such as Kubernetes or Docker Swarm, that aim to make large container deployments easier for IT to manage.
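To make the patching question concrete, here is a minimal sketch that assumes you keep an inventory of which library versions each image contains. The image names, the library `libexample` and all version numbers are hypothetical, and the simple dotted-number parser would need replacing for real-world version strings.

```python
# Hypothetical inventory: image name -> {library: installed version}
INVENTORY = {
    "web-frontend:2.3": {"libexample": "1.4.1", "libother": "2.0.0"},
    "orders-api:1.9": {"libexample": "1.4.2", "libother": "1.9.5"},
    "mysql-custom:8.0": {"libexample": "1.3.9"},
    "batch-jobs:0.7": {"libother": "2.0.0"},
}


def parse_version(text):
    """Turn a dotted numeric version such as '1.4.2' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def images_needing_patch(inventory, library, fixed_version):
    """Return sorted image names whose copy of `library` predates `fixed_version`."""
    fixed = parse_version(fixed_version)
    return sorted(
        image
        for image, packages in inventory.items()
        if library in packages and parse_version(packages[library]) < fixed
    )


print(images_needing_patch(INVENTORY, "libexample", "1.4.2"))
```

With this sample data, the images still carrying a version of `libexample` older than 1.4.2 are the ones to rebuild and redeploy. Images that do not include the library at all are skipped.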

Containers are deployed in two ways: either by creating an image to run in a container or by downloading a pre-created image, such as from Docker Hub. Docker is by far the largest and most popular container platform and, although there are alternatives, it has become synonymous with containerization. Docker was originally built on LXC.

Cloud container security

Once cloud containers became popular, the focus turned to how to keep them secure. Docker containers used to have to run as a privileged user on the underlying OS, which meant that, if key parts of the container were compromised, root or administrator access could potentially be obtained on the underlying OS, or vice versa. Docker now supports user namespaces, which enable containers to be run as specific users.
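One small defensive habit that follows from this is for a containerized program to check which user it is running as. The sketch below is an assumption-laden illustration, not a Docker feature: `running_as_root` is a hypothetical helper, and it relies on `os.geteuid`, which is available only on Unix-like systems. It reports the user ID as seen inside the container, so with user namespace remapping a value of 0 may still map to an unprivileged user on the host.

```python
import os


def running_as_root(euid=None):
    """Return True when the effective user ID is 0 (root)."""
    if euid is None:
        euid = os.geteuid()  # Unix-like systems only
    return euid == 0


if running_as_root():
    print("warning: this process is running as root")
else:
    print("ok: this process is running as an unprivileged user")
```

A container can be started as a specific non-root user with the `--user` option of `docker run`, in which case the check above should report an unprivileged user.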

A second option to minimize access issues is to deploy rootless containers. These containers add an additional security layer because they do not require root privileges. Therefore, if a rootless container is compromised, the attacker will not automatically gain root access on the host. Another benefit of rootless containers is that different users can run containers on the same endpoint. Docker supports rootless containers, while rootless support in Kubernetes has been slower to arrive.

Another issue is the security of images downloaded from Docker Hub. By downloading a community-developed image, the security of a container cannot necessarily be guaranteed. Docker addressed this starting in version 1.8 with a feature called Docker Content Trust, which verifies the publisher of the image. Images can also be scanned for vulnerabilities. This goes some way toward providing assurance, but its verification processes may not be thorough enough if you are using containers for particularly sensitive applications. In this case, it would be sensible to create the image yourself to ensure your security policies have been enforced and updates are made regularly.
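Docker Content Trust is switched on for the Docker command-line client by setting the `DOCKER_CONTENT_TRUST` environment variable to `1`. The sketch below shows one way a script might prepare such a pull. The helper `trusted_pull` and the image name `example/app:1.0` are hypothetical and exist only for this illustration.

```python
import os


def trusted_pull(image, base_env=None):
    """Return (argv, env) for pulling `image` with Docker Content Trust enabled."""
    # Copy the environment so the caller's (or the process's) own is not modified.
    env = dict(os.environ if base_env is None else base_env)
    env["DOCKER_CONTENT_TRUST"] = "1"  # ask the Docker CLI to enforce content trust
    return ["docker", "pull", image], env


# An empty base environment keeps the printed output predictable.
argv, env = trusted_pull("example/app:1.0", base_env={})
print(argv, env["DOCKER_CONTENT_TRUST"])
```

On a host with Docker installed, calling `trusted_pull(image)` without `base_env` and passing the result to `subprocess.run(argv, env=env, check=True)` would attempt the pull with content trust enforced. The sketch itself never invokes Docker.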

Note, however, that company-made images are only as secure as your employees make them. Proper training for those creating images is critical.

Containers for sensitive production applications should be treated in the same way as any other deployment when it comes to security. Inside the container is software that may have vulnerabilities. Although a compromised container might not grant access to the underlying server OS, other problems remain possible. A denial-of-service attack, for example, could disable a MySQL container and, therefore, knock a website offline.

Make sure team members understand that containers don't provide as much isolation as VMs. While containers do offer segmentation from the rest of the endpoint, they are rarely hardened to the point of providing as much isolation as VMs. With VMs, users can open attachments and untrusted applications to test their safety. However, malware developers have gotten smarter, developing varieties of malware that can delay calling home or detect when they are running in a virtualized environment.

Regardless, if a container starts acting oddly or consuming more resources than necessary, it's easy enough to shut it down and restart it. And, while not a true sandbox experience, containers provide a way to keep untrusted applications separate and unaware of other applications on the endpoint. The Linux kernel also features secure computing mode (seccomp). An application sandboxing method, seccomp monitors and restricts system calls in container processes. If malicious software infiltrates the container, it could, therefore, only make limited system calls.
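To give a sense of what a seccomp restriction looks like in practice, the sketch below generates a deny-by-default profile in the JSON layout that Docker accepts for custom seccomp profiles. The helper `make_seccomp_profile` is hypothetical, and the three-entry allowlist is purely illustrative: a real workload needs far more system calls than this to start at all.

```python
import json


def make_seccomp_profile(allowed_syscalls):
    """Build a deny-by-default seccomp profile as a plain dictionary."""
    return {
        # Any system call not explicitly listed below fails with an error.
        "defaultAction": "SCMP_ACT_ERRNO",
        "syscalls": [
            {"names": sorted(allowed_syscalls), "action": "SCMP_ACT_ALLOW"},
        ],
    }


# Illustrative allowlist only; far too small for a real application.
profile = make_seccomp_profile({"read", "write", "exit_group"})
print(json.dumps(profile, indent=2))
```

Saved to a file, a profile like this can be applied with the `--security-opt seccomp=<file>` option of `docker run`. In most cases, starting from Docker's default profile and removing calls is more practical than building an allowlist from scratch.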

As always, ensure team members prioritize security threats and vulnerabilities. Follow container security best practices, and be aware of container security vulnerabilities and attacks. Proper deployment and management are key. Regular container scanning to ensure images and active containers remain updated and secure is also important.

In addition, do not forget the security of the server hosting the containers. Docker has some great advice on its website for securing containers.

Lastly, the important thing to remember is that, just because containers are a newer type of technology, it doesn't mean that traditional security policies and procedures shouldn't be applied to them.

The usefulness of cloud containers

There aren't many organizations that won't benefit from introducing cloud containers to their infrastructure. Their portability, both internally and in the cloud, coupled with their low cost, makes them a great alternative to full-blown VMs.

However, it's not necessarily an either-or decision. Most companies will need both containers and VMs. Each has its own strengths and weaknesses, and the two can complement each other rather than compete.