Chef (software)

Chef is an open source systems management and cloud infrastructure automation platform. Opscode created the Chef configuration management tool, and the company later changed its name to Chef.

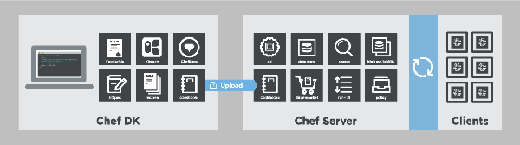

Chef transforms infrastructure into code to automate server deployment and management. A DevOps professional installs the Chef Development Kit (Chef DK) on a workstation to define components and interact with the Chef Server.

Chef can manage a variety of node types, including servers, cloud virtual machines, network devices and containers. It manages Linux, Windows, mainframe and several other systems. The tool is intended to enable developers and IT operations professionals to work together to deploy applications on IT infrastructure.

How Chef works

The Chef DK, a software development kit, includes:

- Test Kitchen, ChefSpec, Cookstyle and Foodcritic for testing;

- the chef-client agent that Chef Server uses to communicate with managed nodes;

- Ohai, a tool that detects common system details;

- Chef and Knife command-line tools; and

- the InSpec auditing framework.
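To make the idea of system detail detection concrete, the following is a minimal Python sketch of the kind of information a tool like Ohai gathers. It is not Ohai itself and does not use Ohai's attribute names; the function name `collect_node_facts` and the chosen keys are assumptions made for illustration, using only the Python standard library.

```python
import json
import os
import platform
import socket


def collect_node_facts():
    """Gather a few system details, similar in spirit to what a detection tool reports."""
    return {
        "hostname": socket.gethostname(),
        "os": platform.system(),          # for example "Linux", "Windows" or "Darwin"
        "os_release": platform.release(),
        "machine": platform.machine(),    # CPU architecture string
        "cpu_count": os.cpu_count(),
    }


if __name__ == "__main__":
    # Print the collected details as JSON, one attribute per line.
    print(json.dumps(collect_node_facts(), indent=2))
```

A configuration tool can use details like these to decide, per node, which settings apply.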

Chef uses code packages called recipes, grouped into cookbooks, to define how to configure each managed node. A recipe describes the state a resource should be in at any given time. A cookbook bundles recipes with their dependencies and necessary files, such as attributes, libraries and metadata, to support a particular configuration.
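The idea that a recipe describes a desired state, rather than a sequence of commands, can be sketched in a few lines of Python. This is a conceptual model only, not Chef's Ruby-based recipe syntax: `FileResource`, `converge` and the dictionary standing in for a node's disk are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class FileResource:
    """Hypothetical resource: a file that should exist with the given content."""
    path: str
    content: str


def converge(resource, filesystem):
    """Bring `filesystem` (a dict standing in for a real disk) to the desired state.

    Returns True if a change was made, False if the state already matched.
    """
    if filesystem.get(resource.path) == resource.content:
        return False  # already in the desired state, so nothing to do
    filesystem[resource.path] = resource.content
    return True


# In this sketch, a "recipe" is an ordered list of resources.
recipe = [
    FileResource("/etc/motd", "Managed by configuration management\n"),
    FileResource("/etc/app.conf", "port=8080\n"),
]

# Simulated node whose configuration has drifted from the desired state.
node_disk = {"/etc/app.conf": "port=80\n"}

first_run = [converge(r, node_disk) for r in recipe]
second_run = [converge(r, node_disk) for r in recipe]
print(first_run)   # [True, True]
print(second_run)  # [False, False]
```

The second run changes nothing because the node already matches the description, which is the property that makes repeated runs safe.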

Chef is an agent-based tool in which chef-client pulls the configuration information for the managed node from Chef Server. The chef-client is installed on every node, where it executes the actual configuration. It is written in the Ruby programming language.

In agent-based configuration management, each agent pulls information from a central repository, Chef Server. This model tolerates poor network connectivity and enables flexible update rollouts. Policy modification and policy implementation are separate. Chef Server runs on any major distribution of Linux.
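The pull model described above can be illustrated with a small Python simulation. It is a sketch under stated assumptions, not Chef's protocol or API: `PolicyServer`, `NodeAgent`, `run_once` and the `server_reachable` flag are hypothetical names, and the server is an in-memory object rather than a network service.

```python
class PolicyServer:
    """Hypothetical central repository holding one policy per node."""

    def __init__(self):
        self._policies = {}

    def publish(self, node_name, version, settings):
        # Policy modification happens here, independently of any agent run.
        self._policies[node_name] = {"version": version, "settings": dict(settings)}

    def fetch(self, node_name):
        return self._policies.get(node_name)


class NodeAgent:
    """Hypothetical agent that pulls and applies its policy on each run."""

    def __init__(self, name, server):
        self.name = name
        self.server = server
        self.applied_version = None
        self.settings = {}

    def run_once(self, server_reachable=True):
        if not server_reachable:
            return "skipped"  # keep the last applied state and retry next time
        policy = self.server.fetch(self.name)
        if policy is None or policy["version"] == self.applied_version:
            return "unchanged"
        # Policy implementation happens here, on the node itself.
        self.settings = dict(policy["settings"])
        self.applied_version = policy["version"]
        return "updated"


server = PolicyServer()
agent = NodeAgent("web01", server)

server.publish("web01", 1, {"nginx_port": 80})
results = [agent.run_once()]
results.append(agent.run_once())
server.publish("web01", 2, {"nginx_port": 8080})
results.append(agent.run_once(server_reachable=False))
results.append(agent.run_once())
print(results)  # ['updated', 'unchanged', 'skipped', 'updated']
```

Because the agent initiates every exchange, a node that misses a run during a network outage simply picks up the newest policy the next time it reaches the server.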

A user can share cookbooks on GitHub or in the Chef Supermarket, both of which enable other Chef users to use and manage their own versions of those cookbooks.

An administrator can define operational processes with the policy feature in the Chef Server. The admin can use policy to define server types, identify environment stages, map data types and specify cookbook details. InSpec also provides a way for IT organizations to discover what is currently deployed and how, which helps security professionals ensure that deployments comply with regulations.

Chef also offers tools such as Chef Analytics, Chef Backend, Chef Compliance and Chef Manage.

Chef software options

While Chef is an open source framework, the company Chef sells a packaged platform for enterprise needs, Chef Automate, which is priced per node.

Chef Automate provides a slate of workflow, visibility and governance capabilities. The platform enables an enterprise to manage application and cookbook deployments across clusters of nodes. Chef Automate also includes compliance controls and other features that facilitate automation.

Amazon Web Services (AWS) also provides AWS OpsWorks for Chef Automate. This AWS-native implementation includes a managed Chef server and automation tools that integrate with AWS' public cloud services. To use Chef with other cloud providers, look for the tool in each provider's marketplace images. Chef's original SaaS managed cloud service offering, Hosted Chef, is not as commonly used as these other options.

A business can also run the open source version of Chef, a free download that an IT staff configures and manages locally.

Chef vs. Puppet, Ansible and Salt

Like Chef, Puppet is an open source configuration management platform based on Ruby. Puppet released the initial version of its software in 2005, while Opscode unveiled Chef in 2009. Salt and Ansible joined the field in 2011 and 2012, respectively.

Chef's focus is to apply software development best practices to IT operations, so it highlights testing discipline as a factor that differentiates it from competitors. For example, a Chef user can test a deployment on a laptop or VM environment before it goes to live production infrastructure.

Puppet differs from Chef in a variety of ways. For example, Puppet uses its own domain-specific language and reports on nodes in its dashboard. Chef also relies on a domain-specific language, one based on Ruby, which the company states is accessible and understandable.

Chef users commonly work with recipes through an integrated development environment. Chef is declarative, meaning the user must describe the state they desire rather than writing instructions for how to achieve that state. A system administrator might gravitate toward Puppet, while a developer might use Chef.

Salt and Ansible use YAML for configuration input, a format that is designed for easy human interpretation and creation. Salt also relies on the widely known Python general purpose programming language.

Ansible is agentless, while Salt primarily uses agents. Chef and Puppet are agent-based as well. All four configuration management technologies are available in both free and paid enterprise versions.

Chef and containers

Container-based application deployment, via tools such as Docker and Kubernetes, can take place without the aid of configuration management tools. While container-based and serverless apps are in the minority, they could become the standard way to run workloads in the future, and, in that case, configuration management would become irrelevant.

Chef offers a tool named Habitat that targets software developers. It enables app portability across technology generations. The tool aims to eliminate the time developers spend rewriting tooling to package and deploy application code, which situates it within the container movement.